Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there is an XML version available for digesting as well.

About me

This is a page not in the main menu.

Published:

This post will show up by default. To disable scheduling of future posts, edit _config.yml and set future: false.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

We were preparing to use a direct visual odometry (VO) method built on photometric error, so I focused on photometric calibration while learning Direct Sparse Odometry Lite and porting it to our drone. The goal was to provide localization in the wild and to evaluate how much photometric calibration matters. I followed the manual photometric calibration paper from the TUM group and developed code to compute the camera response function and vignetting map. The calibration proved useful on relative error metrics and prevented spikes in localization error.
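For context, here is the image formation model commonly used in this line of work. It is stated as an assumption about what the calibration estimates, not taken from this project's code. The observed intensity I at pixel x depends on the scene irradiance B, the exposure time t, the vignetting map V, and the camera response function G. Once G and V are known, the photometrically corrected image I' follows by inverting the model:

```latex
I(\mathbf{x}) = G\bigl(t \, V(\mathbf{x}) \, B(\mathbf{x})\bigr),
\qquad
I'(\mathbf{x}) = t \, B(\mathbf{x}) = \frac{G^{-1}\bigl(I(\mathbf{x})\bigr)}{V(\mathbf{x})}
```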

I worked through a detailed proof of the convex relaxation portion of the paper Blind Deconvolution using Convex Programming and reproduced its results. I completed this project for ECE273 (Convex Optimization), and it greatly improved my understanding of the topic. My instructor was Prof. Piya Pal.

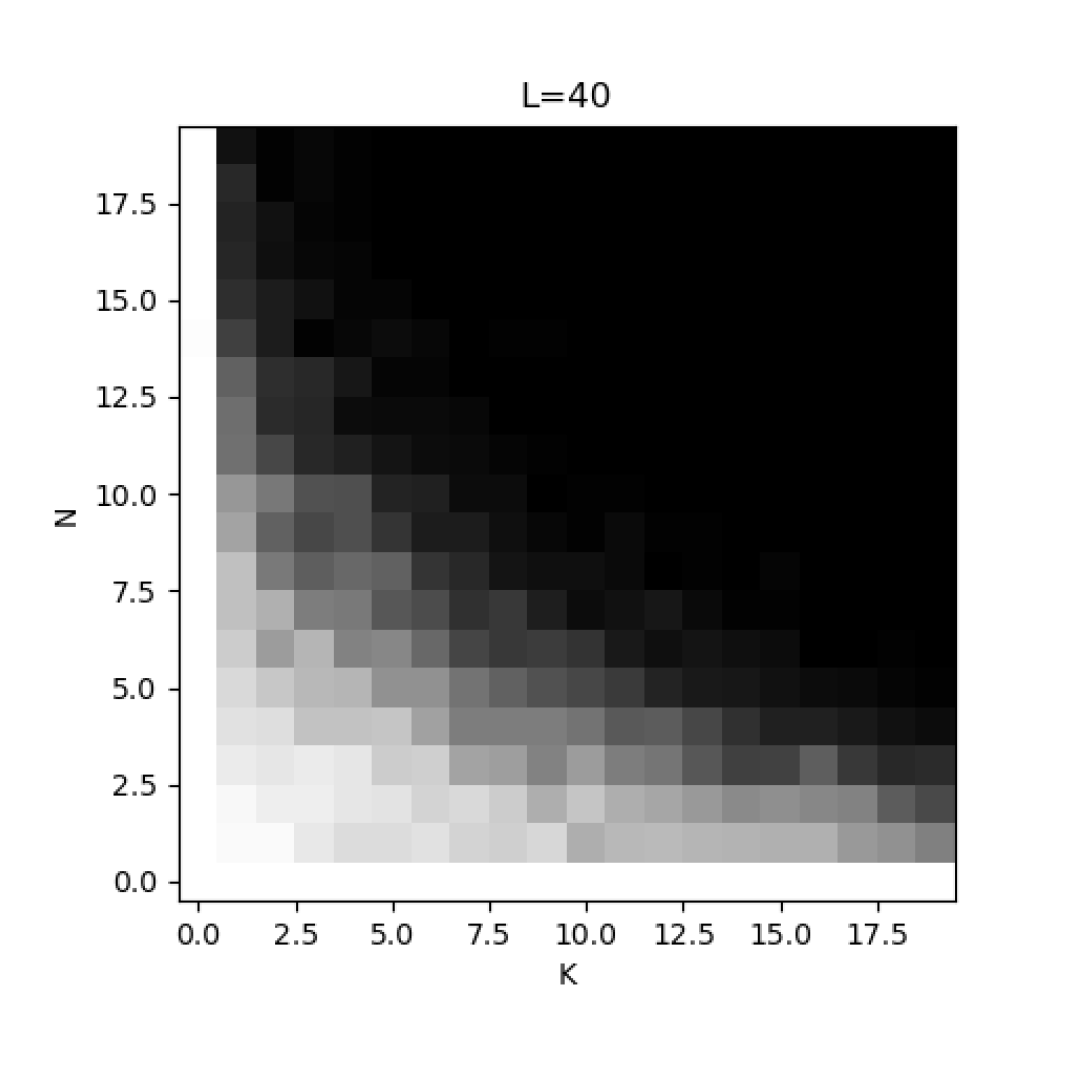

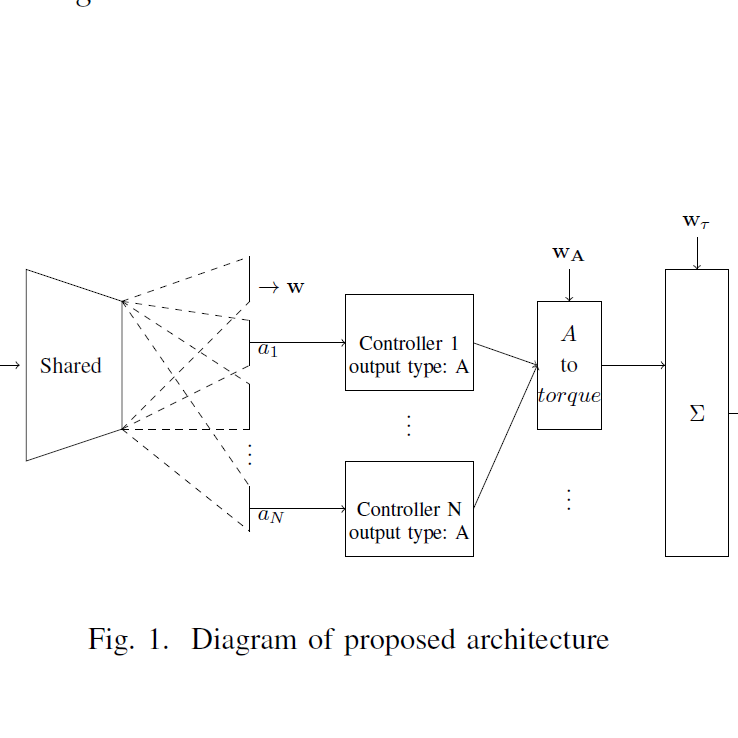

I learned to use Isaac Gym and designed a network architecture in which a high-level network selects and combines appropriate low-level controllers. The architecture proved beneficial. This was a course project supervised by Prof. Michael Yip.

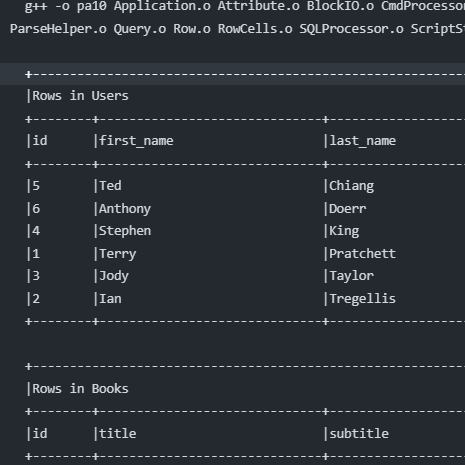

A mini relational database implemented in C++ that supports SQL-like commands, with indexing and caching.

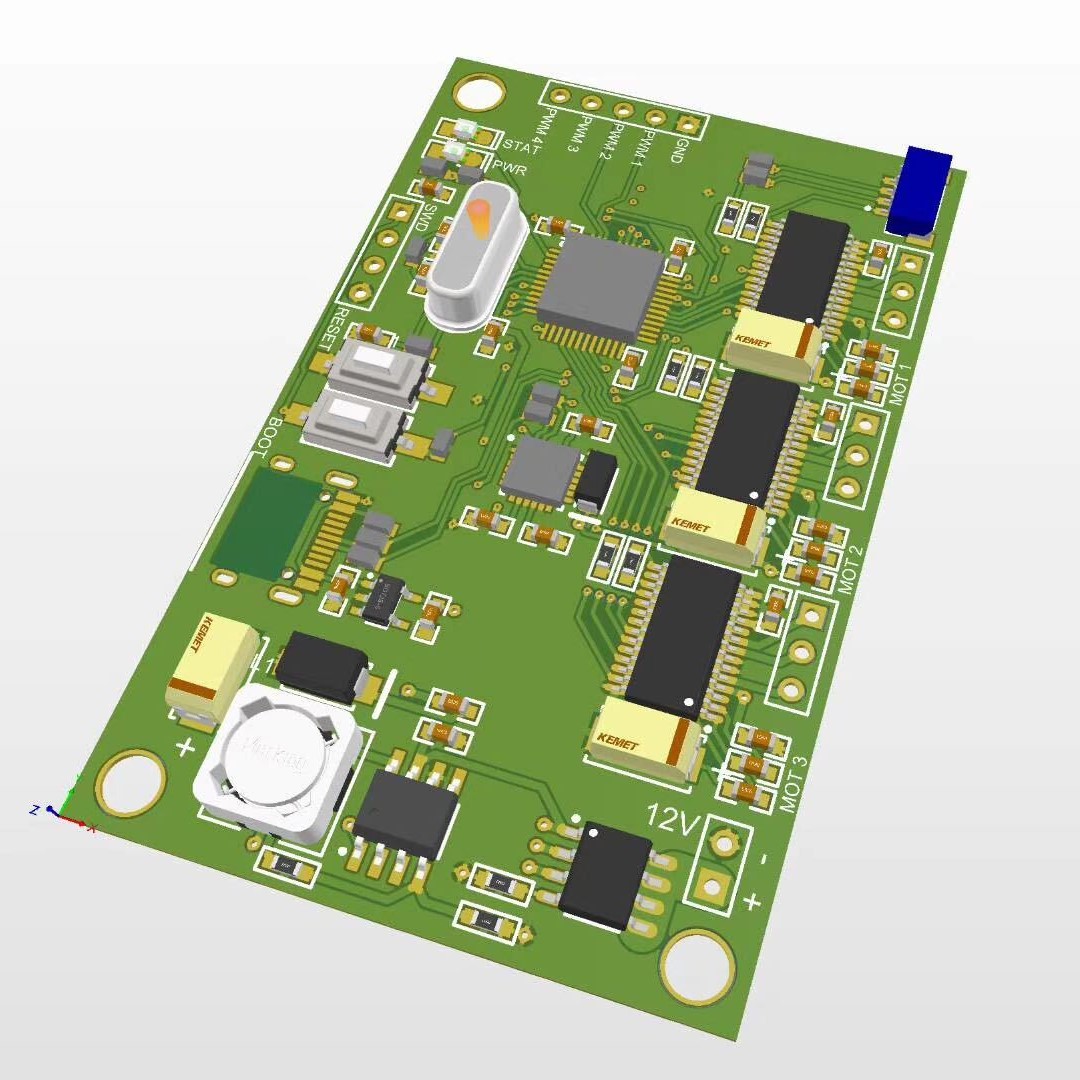

We designed the PCB and control algorithm for this quadcopter, which measures ~14 cm diagonally. This was a course project supervised by Prof. Steven Swanson.

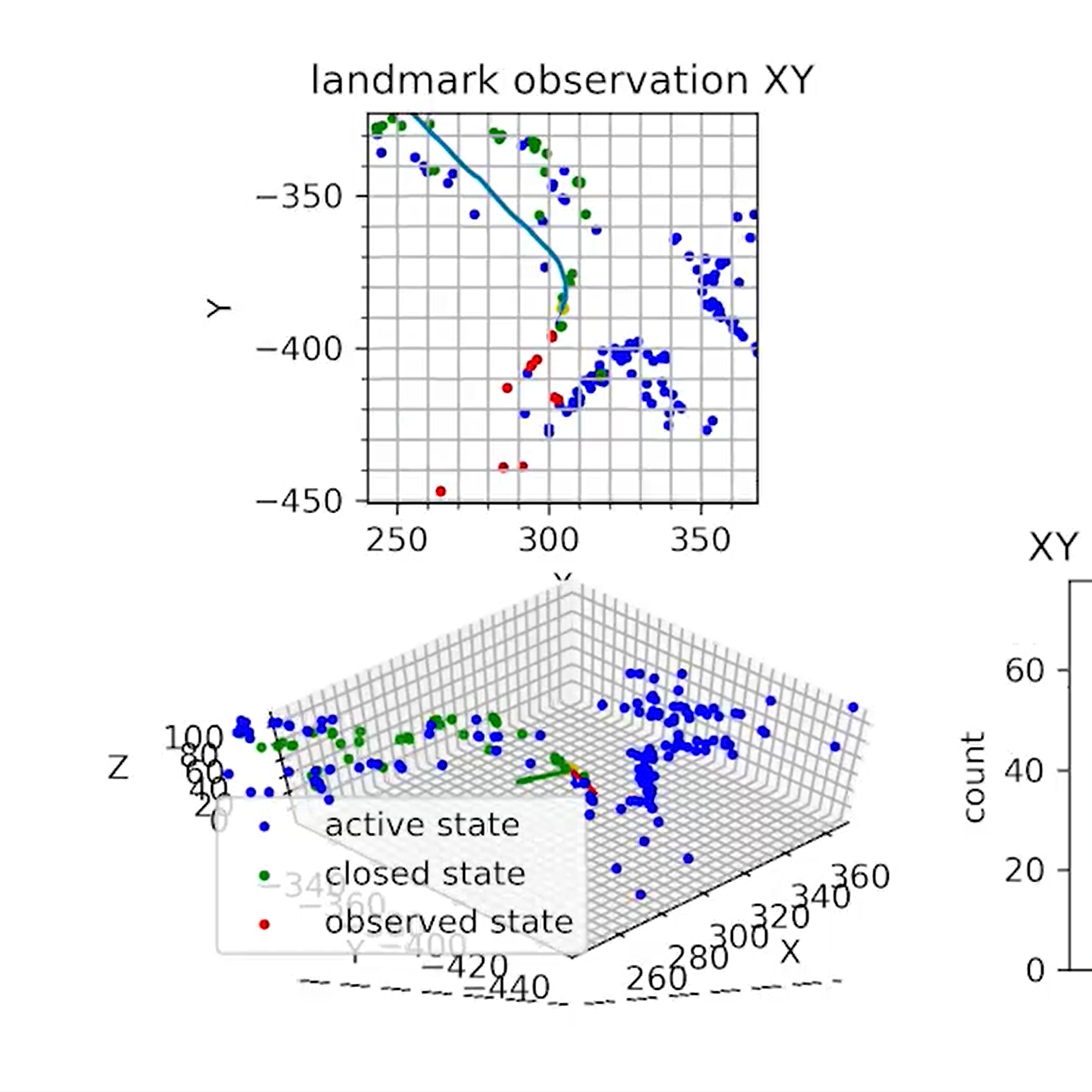

Given stereo camera and IMU observations from a real self-driving dataset, I developed a sparse, feature-based SLAM algorithm that uses an EKF to jointly estimate the agent pose and landmark positions. My instructor was Prof. Nikolay Atanasov.

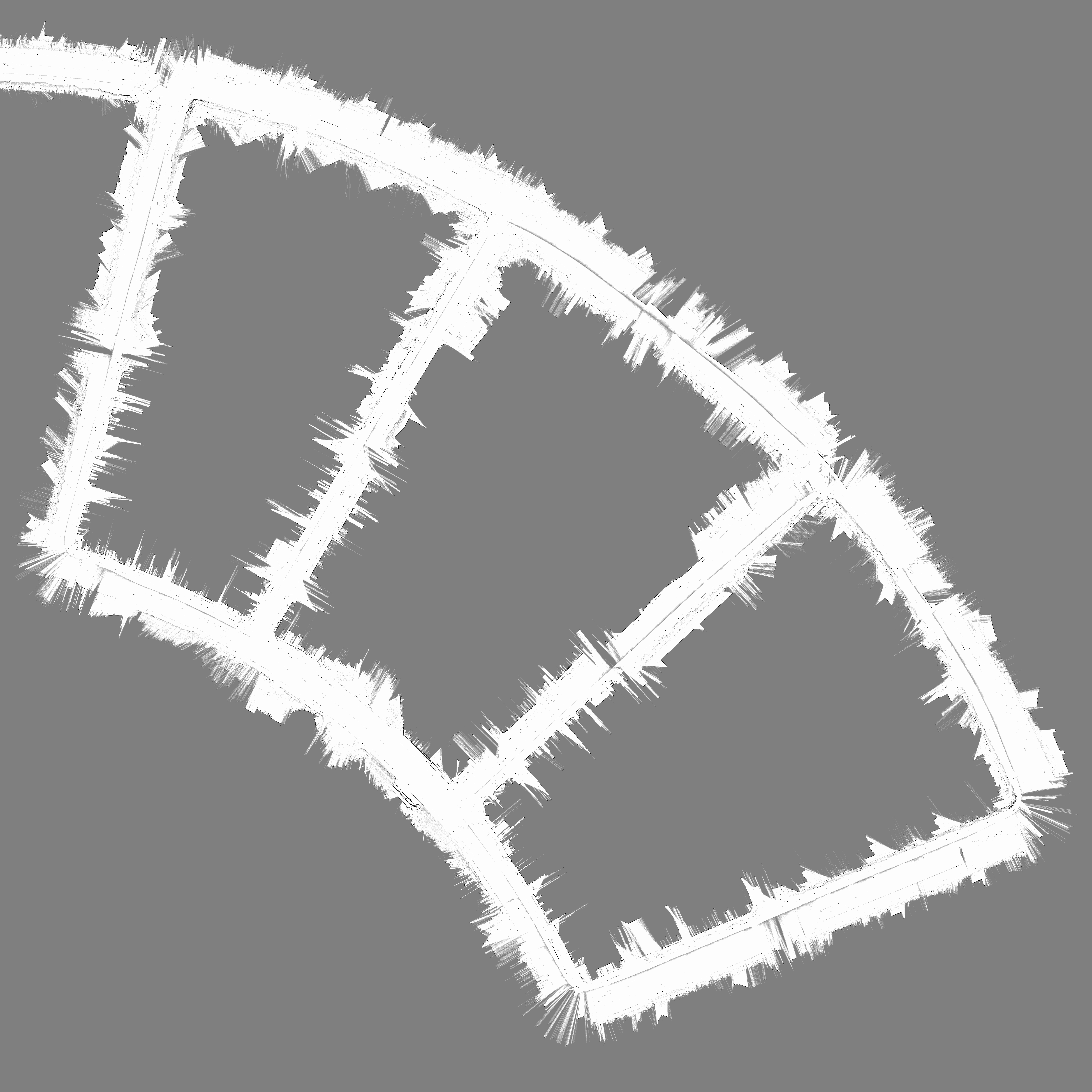

Given LIDAR and IMU observations from a real self-driving dataset, I developed a preliminary (no loop closure) SLAM algorithm based on an occupancy map and a particle filter. My instructor was Prof. Nikolay Atanasov.
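As an illustration of the resampling step that a particle filter of this kind typically needs, here is a minimal sketch of systematic (low-variance) resampling. The project description does not say which resampling scheme was used, so the scheme and the function name `systematic_resample` are assumptions made for this example.

```python
import random


def systematic_resample(weights, rng=None):
    """Return particle indices drawn by systematic (low-variance) resampling.

    `weights` are non-negative particle weights; they need not be normalized.
    This is an illustrative sketch, not the project's actual code.
    """
    rng = rng or random.Random()
    n = len(weights)
    total = sum(weights)
    if n == 0 or total <= 0:
        raise ValueError("need at least one particle with positive weight")

    step = total / n
    pointer = rng.random() * step  # single random offset in [0, step)
    cumulative = weights[0]
    i = 0
    indices = []
    for _ in range(n):
        # Advance to the particle whose cumulative weight covers the pointer.
        while pointer >= cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        indices.append(i)
        pointer += step  # evenly spaced pointers share the one random offset
    return indices
```

Particles with large weights are selected several times while particles with negligible weight tend to drop out, which keeps the particle set focused on likely poses.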

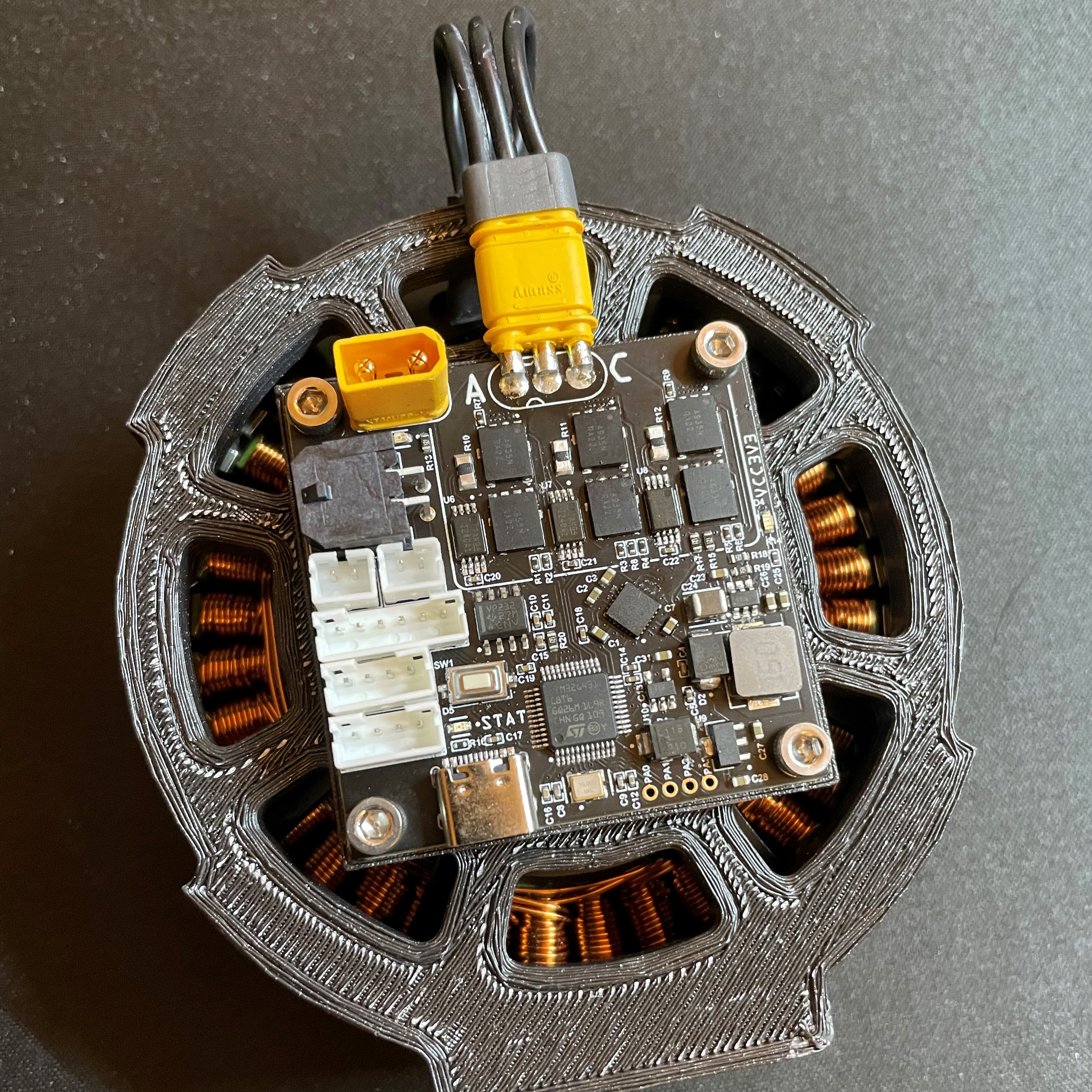

I want to build my own powerhouse for future robotics projects. The controller board senses the rotor's position with a magnetic encoder and modulates the three-phase voltage accordingly to achieve the control goal. I have tuned the current loop to a bandwidth of 2 kHz and am currently tuning the velocity and position loops. A command interface is also under construction.

This module was designed for the localization needs of my club, Yonder Dynamics. I designed a compact PCB that holds an IMU, pressure sensor, GPS, and magnetometer and sends all of their data to the ROS network through a ported rosserial package. I also learned to use an ESKF to fuse the measurements.

I made a hand-held gimbal for stabilizing my phone when taking videos. The system contains 3 BLDC motors and 2 IMUs, one at the handle and another attached to the end effector (phone holder). The difference between the IMU readings is used to calculate joint angles and guide the three-phase voltage modulation.

Published in IROS 2022, 2022

Developing robust vision-guided controllers for quadrupedal robots in complex environments with various obstacles, dynamical surroundings and uneven terrains is very challenging. While Reinforcement Learning (RL) provides a promising paradigm for agile locomotion skills with vision inputs in simulation, it is still very challenging to deploy the RL policy in the real world. Our key insight is that aside from the discrepancy in the observation domain gap between simulation and the real world, the latency from the control pipeline is also a major cause of the challenge. In this paper, we propose Multi-Modal Delay Randomization (MMDR) to address this issue when training with RL agents. Specifically, we randomize the selections for both the proprioceptive state and the visual observations in time, aiming to simulate the latency of the control system in the real world. We train the RL policy for end2end control in a physical simulator, and it can be directly deployed on the real A1 quadruped robot running in the wild. We evaluate our method in different outdoor environments with complex terrain and obstacles. We show the robot can smoothly maneuver at a high speed, avoiding the obstacles, and achieving significant improvement over the baselines.
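The core idea of randomizing observations in time can be sketched as a small buffer that returns an observation from a randomly chosen number of steps in the past. This is an illustrative sketch only, not the authors' implementation; the class name `DelayedObservationBuffer` and the parameter `max_delay_steps` are hypothetical.

```python
import random
from collections import deque


class DelayedObservationBuffer:
    """Stores recent observations and serves one with a random delay.

    Hypothetical helper for illustration; one buffer per modality
    (e.g. proprioceptive state and depth images) could be used.
    """

    def __init__(self, max_delay_steps, rng=None):
        self.max_delay_steps = max_delay_steps
        # Keep the current observation plus up to max_delay_steps older ones.
        self.buffer = deque(maxlen=max_delay_steps + 1)
        self.rng = rng or random.Random()

    def push(self, observation):
        self.buffer.append(observation)

    def sample(self):
        """Return (observation, delay), where delay is in simulation steps."""
        if not self.buffer:
            raise ValueError("no observations pushed yet")
        # Early in an episode, fewer past observations are available.
        max_delay = min(self.max_delay_steps, len(self.buffer) - 1)
        delay = self.rng.randint(0, max_delay)
        return self.buffer[-1 - delay], delay
```

During training, the policy would be fed the sampled (possibly stale) observation instead of the newest one, so that it learns to tolerate latency similar to what the real control pipeline introduces.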

Recommended citation: Chieko Imai, Minghao Zhang, Yuchen Zhang, Marcin Kierebinski, Ruihan Yang, Yuzhe Qin, & Xiaolong Wang (2022). Vision-Guided Quadrupedal Locomotion in the Wild with Multi-Modal Delay Randomization. In 2022 IEEE/RSJ international conference on intelligent robots and systems (IROS). https://arxiv.org/abs/2109.14549

Published in arXiv, 2025

Dense image correspondence is central to many applications, such as visual odometry, 3D reconstruction, object association, and re-identification. Historically, dense correspondence has been tackled separately for wide-baseline scenarios and optical flow estimation, despite the common goal of matching content between two images. In this paper, we develop a Unified Flow & Matching model (UFM), which is trained on unified data for pixels that are co-visible in both source and target images. UFM uses a simple, generic transformer architecture that directly regresses the (u,v) flow. It is easier to train and more accurate for large flows compared to the typical coarse-to-fine cost volumes in prior work. UFM is 28% more accurate than state-of-the-art flow methods (Unimatch), while also having 62% less error and being 6.7x faster than dense wide-baseline matchers (RoMa). UFM is the first to demonstrate that unified training can outperform specialized approaches across both domains. This enables fast, general-purpose correspondence and opens new directions for multi-modal, long-range, and real-time correspondence tasks.

Recommended citation: Zhang, Yuchen, Nikhil Keetha, Chenwei Lyu, Bhuvan Jhamb, Yutian Chen, Yuheng Qiu, Jay Karhade et al. "UFM: A Simple Path towards Unified Dense Correspondence with Flow." arXiv preprint arXiv:2506.09278 (2025). https://uniflowmatch.github.io/

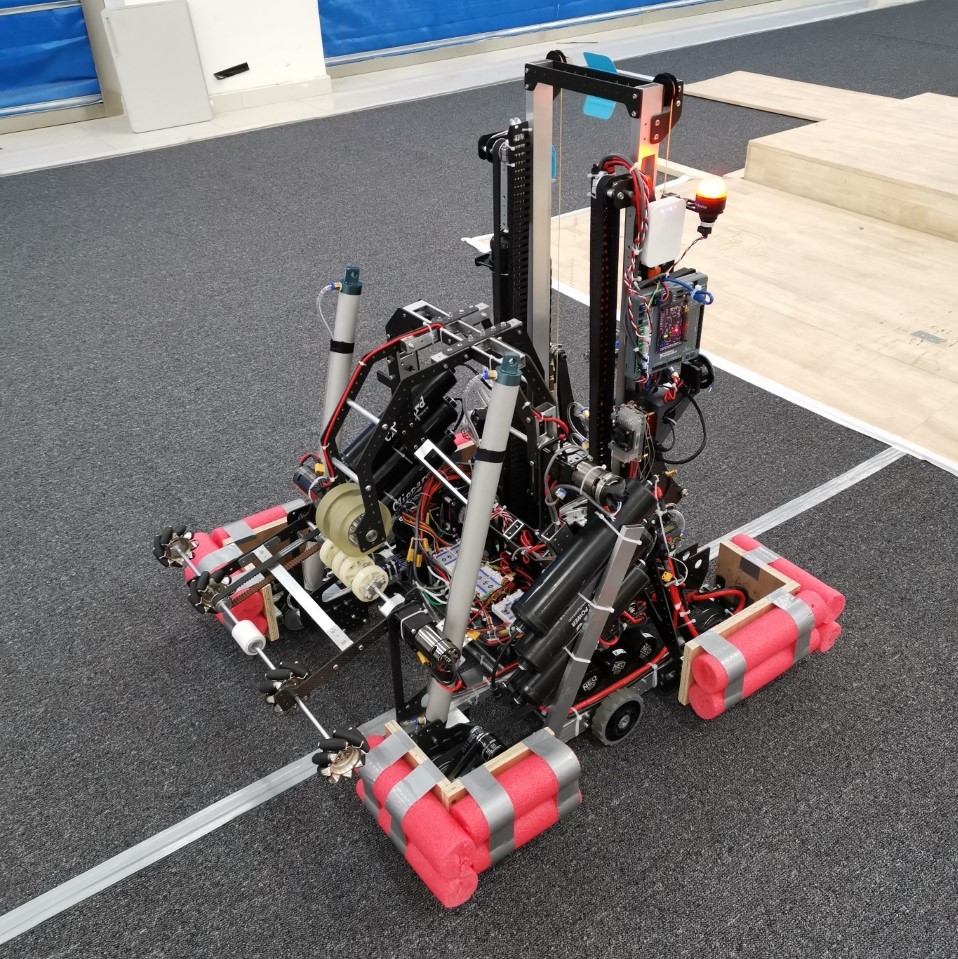

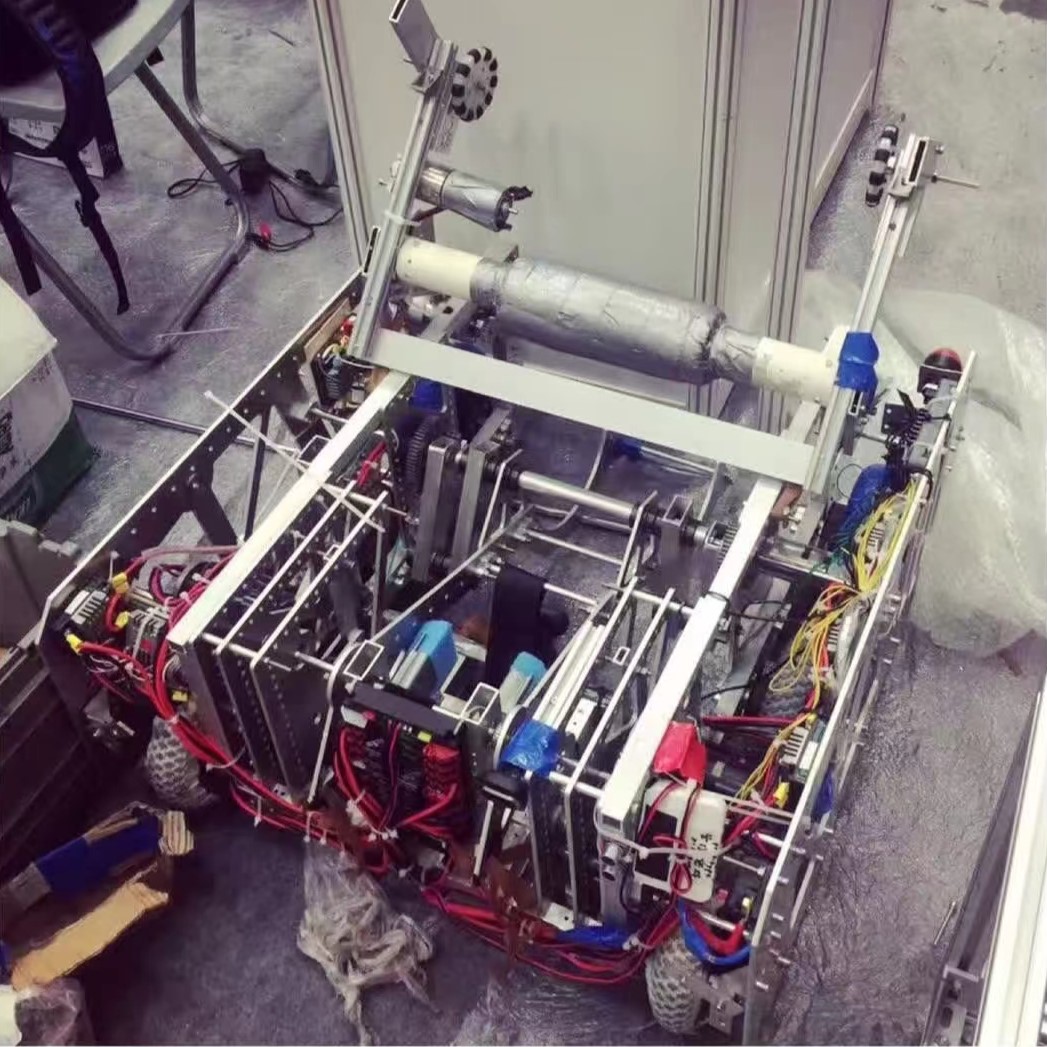

This is our robot for the FRC 2019 competition. It can deliver hatch panels and fuels (big inflated balls) across the competition field. It reuses the elevator to push its back wheels up onto the climbing zone and uses the giant cylinders to climb. In particular, we achieved autonomous pose drive using a double PID loop.
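A double (cascaded) PID loop is often arranged so that an outer position loop produces a velocity setpoint, which an inner velocity loop then tracks. The sketch below assumes that arrangement; the team's actual loop structure and gains are not described here, and the names `PID` and `cascaded_step` are hypothetical.

```python
class PID:
    """Basic PID controller with an optional symmetric output clamp."""

    def __init__(self, kp, ki=0.0, kd=0.0, output_limit=None):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, since there is no history yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        if self.output_limit is not None:
            output = max(-self.output_limit, min(self.output_limit, output))
        return output


def cascaded_step(outer, inner, target_position, position, velocity, dt):
    """One control step: the outer loop sets a velocity target for the inner loop."""
    velocity_setpoint = outer.update(target_position - position, dt)
    return inner.update(velocity_setpoint - velocity, dt)
```

Clamping the outer loop's output bounds the commanded velocity, so a large position error does not demand an unreachable speed from the inner loop.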

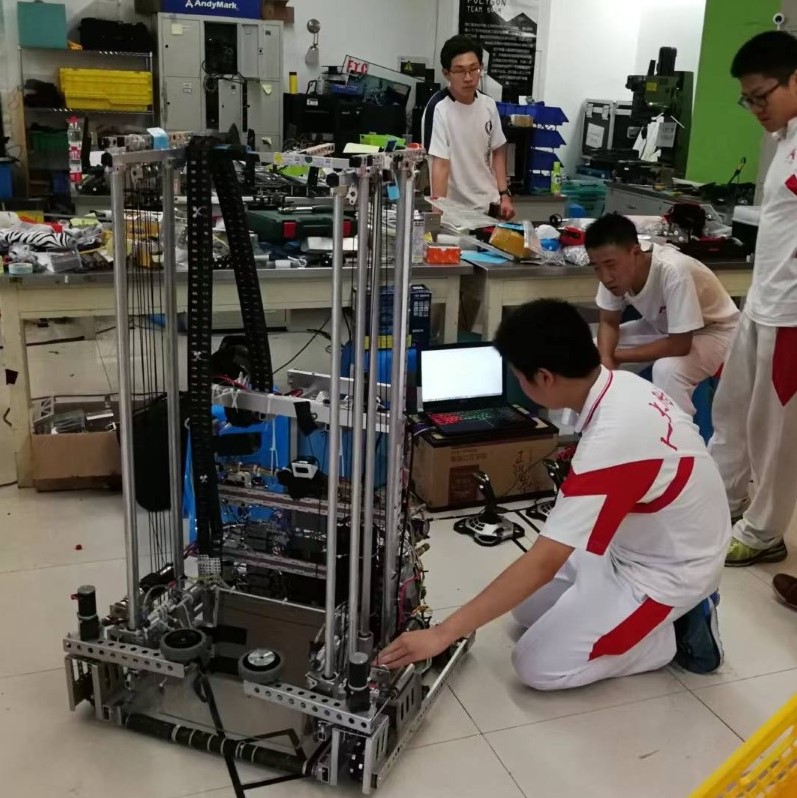

Our robot for the 2018 FRC competition. It can deliver recycle boxes to the ground entrance, or lift them to around 2 meters and dump them into a scale. As the game rules allow, it can also hang itself up with a winch. This season I developed and installed a compact odometry module on the vehicle for our autonomous routine. We made it to the world championship!

Robot for FRC 2017! It is capable of launching balls into a cone ~2 m above the ground. We used mecanum wheels, and I added a gyroscope so that operators could control it in headless mode.
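Headless (field-centric) control usually comes down to rotating the driver's joystick command from the field frame into the robot frame using the gyroscope heading. The sketch below assumes x points forward, y points left, and heading is measured counter-clockwise in radians; the function name `field_to_robot` is hypothetical and this is not the team's original code.

```python
import math


def field_to_robot(vx_field, vy_field, heading_rad):
    """Rotate a field-frame velocity command into the robot frame.

    heading_rad is the robot's heading relative to the field, positive
    counter-clockwise (an assumed convention for this sketch).
    """
    cos_h = math.cos(heading_rad)
    sin_h = math.sin(heading_rad)
    # Apply a rotation by -heading to express the command in robot axes.
    vx_robot = vx_field * cos_h + vy_field * sin_h
    vy_robot = -vx_field * sin_h + vy_field * cos_h
    return vx_robot, vy_robot
```

For example, if the robot has turned 90 degrees to the left and the driver pushes the stick toward field +x, the result is a pure strafe to the robot's right, so the robot keeps moving in the direction the driver intended.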

Robot for 2016 FRC Stronghold, capable of catapulting a ball at a target tower. I learned how to segment and track reflective markers using OpenCV.

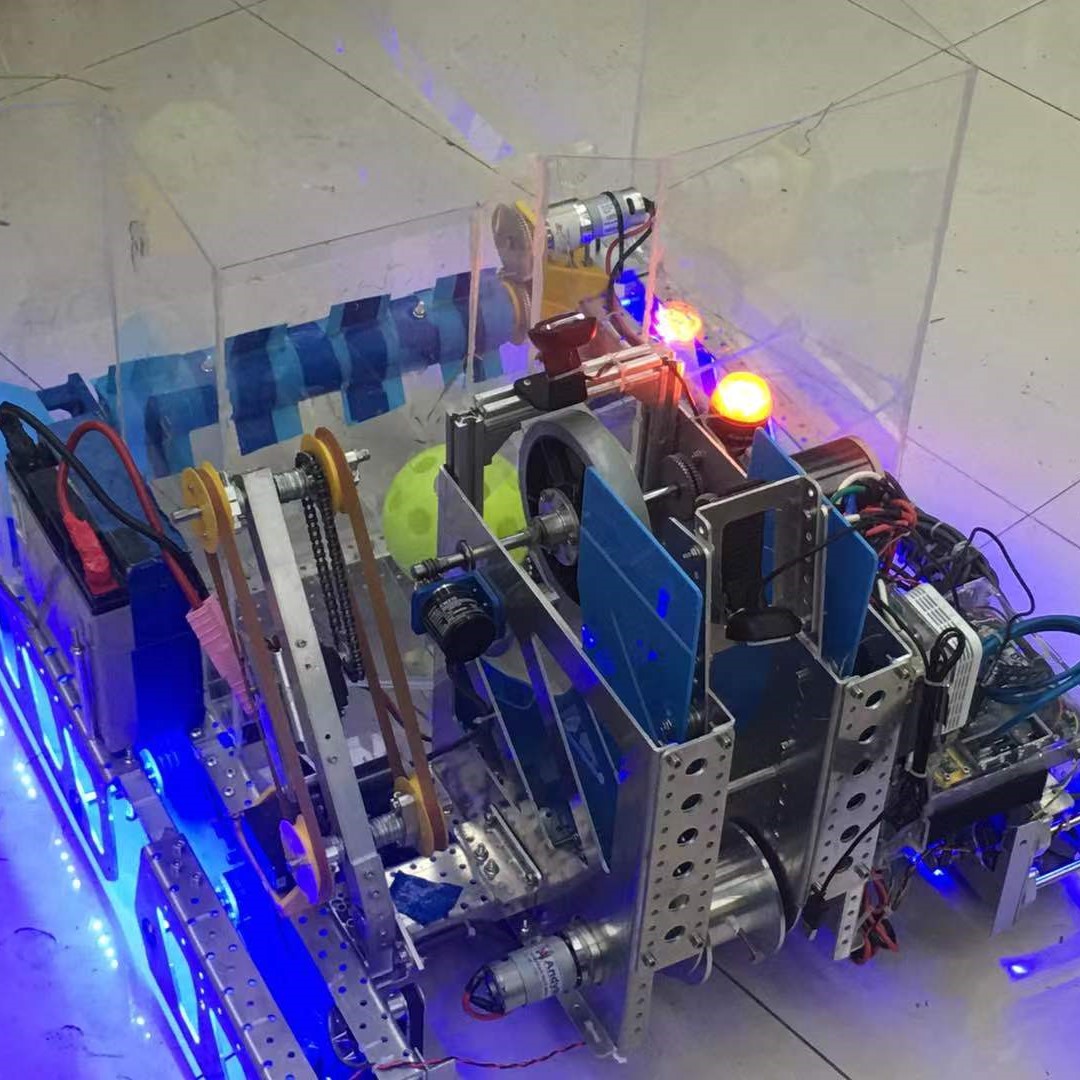

Our FTC 2016 robot, capable of collecting balls and launching them into a basket. I added an acceleration/deceleration sequence to the autonomous code to avoid wheel slip during the autonomous period.
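One simple way to build such an acceleration/deceleration sequence is a trapezoidal speed profile over the distance of a move. The sketch below is an assumption about the general approach, not the original robot code; `ramped_speed` and all of its parameters are hypothetical names.

```python
def ramped_speed(distance_done, total_distance, max_speed, ramp_distance, min_speed=0.1):
    """Return a speed command that ramps up at the start and down at the end.

    Speeds are in arbitrary motor-power units and distances in any consistent
    unit; ramp_distance must be positive. min_speed keeps the robot from
    stalling at the very start and end of the move.
    """
    remaining = total_distance - distance_done
    if remaining <= 0:
        return 0.0  # move finished: stop
    span = max_speed - min_speed
    ramp_up = min_speed + span * (distance_done / ramp_distance)
    ramp_down = min_speed + span * (remaining / ramp_distance)
    # Take the most restrictive limit, but never drop below min_speed.
    return max(min_speed, min(max_speed, ramp_up, ramp_down))
```

Calling this once per control cycle with the encoder distance limits how fast the commanded power changes, which is what reduces wheel slip at the start and end of a move.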

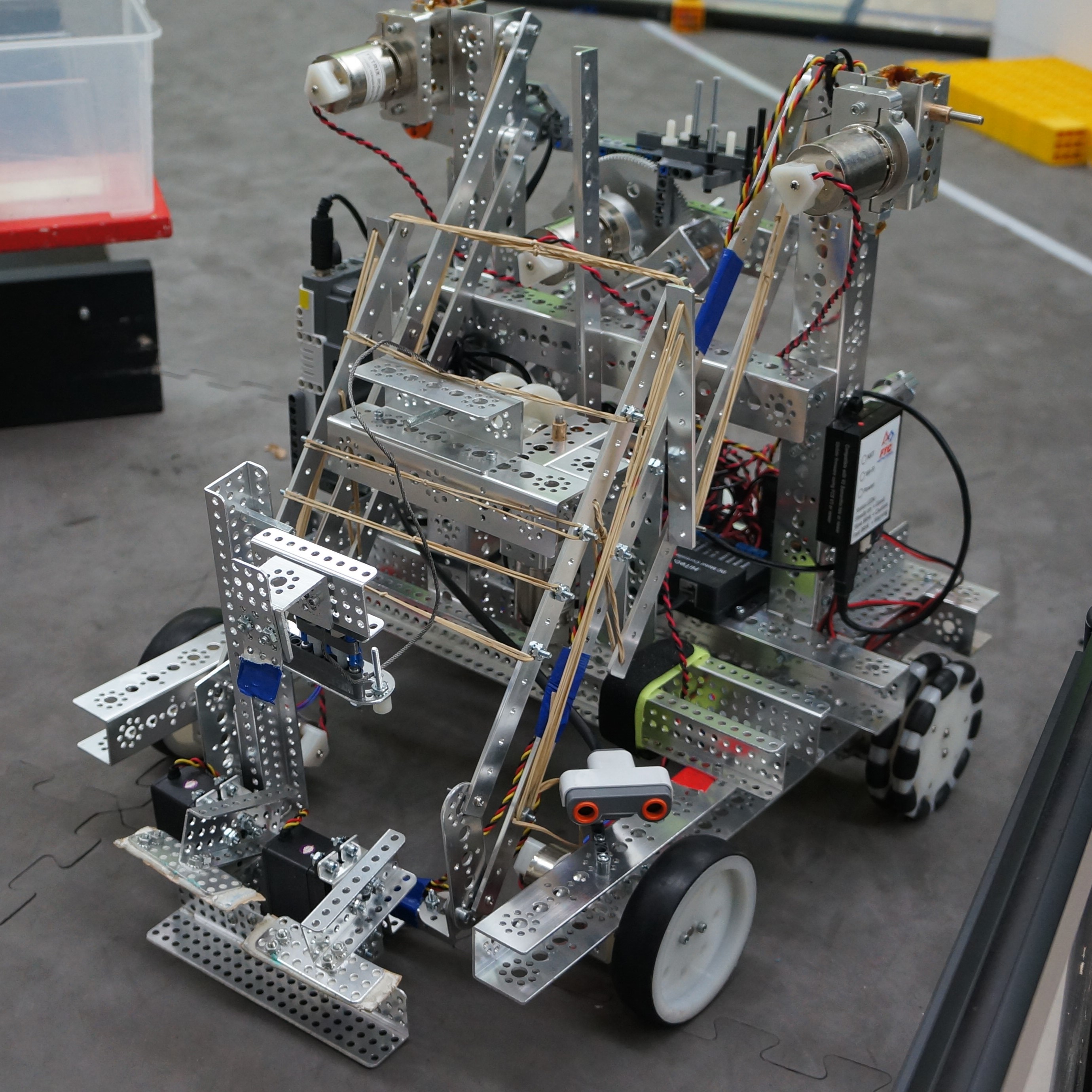

Our FTC 2015 robot. The game requires robots to collect and deliver balls and cubes, then climb up a challenging ramp during the endgame.

Our FTC 2014 robot. The game requires robots to collect balls on the ground and load them into a vertical tube. Unlike other teams, which collect, raise, and dump, we designed our robot to launch the balls upward so that they are guided directly into the tubes!

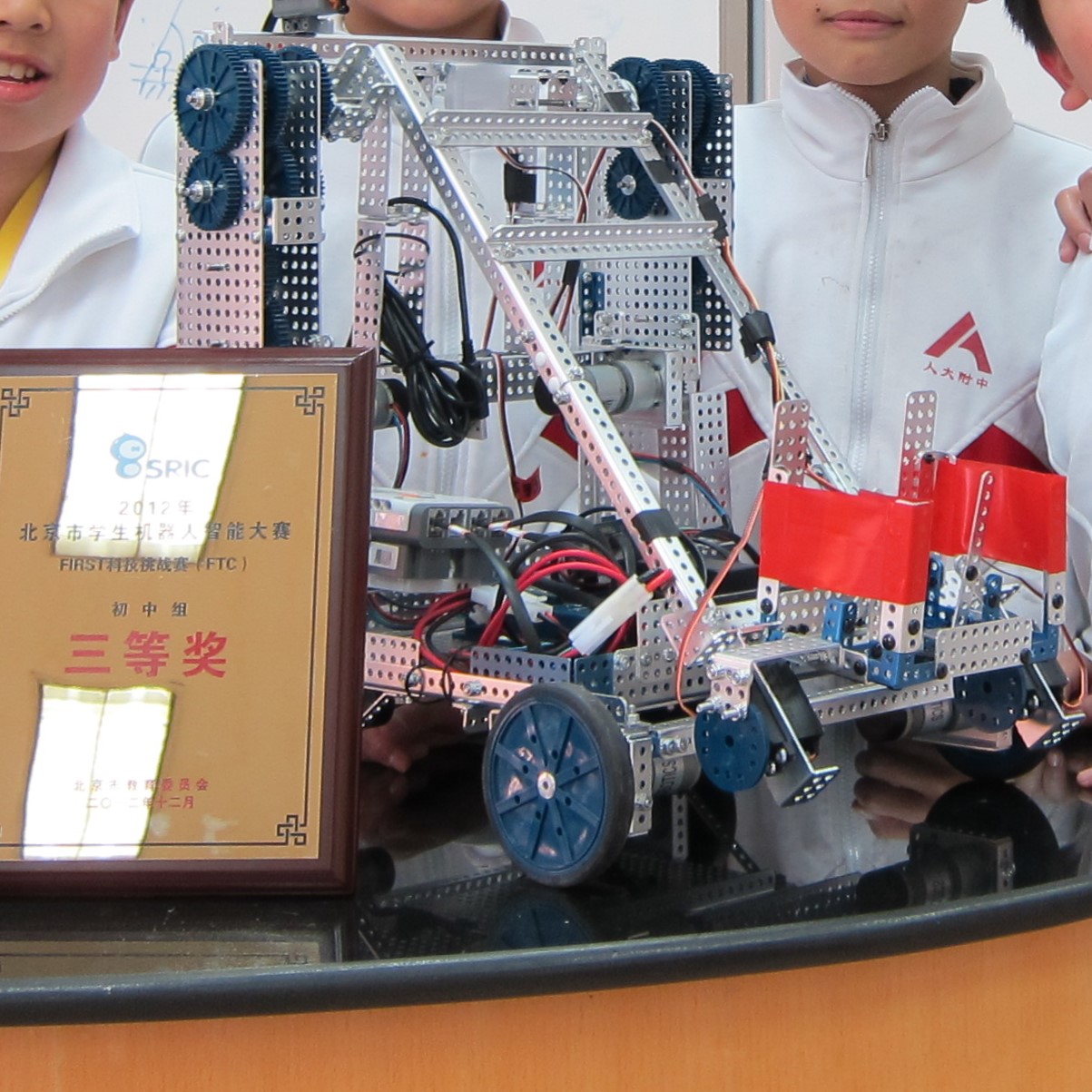

The first metal robot that I worked on extensively! I was the programmer and learned to use encoders. I wrote several autonomous scripts, and we finally made it to the national competition.

The first metal robot I ever worked with. I learned to use new hardware such as servos (then burned out the servos the night before the competition, sad).